Data Lake: Concepts and the 5 Best Practices

The explosion of data in modern enterprises presents an unprecedented challenge. Every day, organizations generate millions of data points: customer records, application logs, financial transactions, IoT sensor readings, social media activity, and more. According to IDC, the global datasphere is expected to reach 175 zettabytes by 2025 (Source: IDC).

Faced with this deluge, traditional infrastructures such as relational databases or even data warehouses are reaching their limits. This is where the data lake comes in: a flexible, scalable, and cost-effective space to store and analyze massive volumes of information, whether structured or not.

But beware: if poorly designed, a data lake can turn into a data swamp, a “muddy pool” of data that is impossible to exploit. How can this pitfall be avoided? The answer lies in applying proven practices drawn from the best implementations observed in the industry.


A data lake is a centralized repository that stores structured, semi-structured, and unstructured data in its raw form, without prior transformation. It differs from a data warehouse in its flexibility and its ability to absorb data of very different natures.
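Storing data “without prior transformation” is often described as schema-on-read: data is written exactly as received, and a structure is applied only when someone reads it. The following Python sketch illustrates the idea under simple assumptions: a local folder stands in for object storage, and the `raw/` zone layout, the batch file name, and the helpers `land_raw` and `read_with_schema` are hypothetical, not part of any specific product.

```python
import json
from pathlib import Path
from tempfile import TemporaryDirectory


def land_raw(lake_root: Path, source: str, records: list[str]) -> Path:
    """Write records exactly as received into a raw zone: no parsing, no validation."""
    target = lake_root / "raw" / source
    target.mkdir(parents=True, exist_ok=True)
    path = target / "batch-0001.jsonl"
    path.write_text("\n".join(records) + "\n", encoding="utf-8")
    return path


def read_with_schema(path: Path, fields: dict[str, type]) -> list[dict]:
    """Apply a schema only at read time: keep the requested fields, cast them,
    and skip lines that do not fit this particular view."""
    rows = []
    for line in path.read_text(encoding="utf-8").splitlines():
        try:
            raw = json.loads(line)
            rows.append({name: cast(raw[name]) for name, cast in fields.items()})
        except (json.JSONDecodeError, KeyError, TypeError, ValueError):
            continue  # the raw line stays in the lake; only this view ignores it
    return rows


with TemporaryDirectory() as tmp:
    batch = land_raw(Path(tmp), "pos", [
        '{"id": "1", "amount": "19.90"}',
        "not json",
        '{"id": "2", "amount": "5"}',
    ])
    print(read_with_schema(batch, {"id": int, "amount": float}))
    # [{'id': 1, 'amount': 19.9}, {'id': 2, 'amount': 5.0}]
```

Because the raw file is never rewritten, another team can later read the same batch with a different schema without any reloading.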

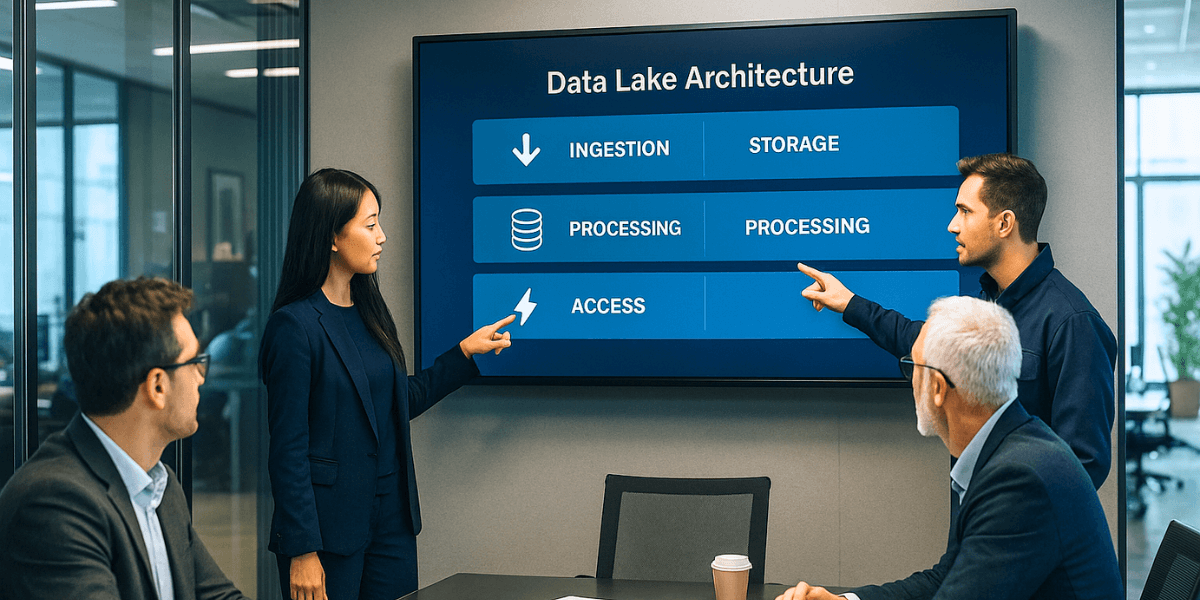

The main components of a data lake include:

Data lakes enable high-value use cases such as training AI and machine learning models, performing real-time IoT analytics, or detecting banking fraud at scale.

An IDC study on AWS data lake and AI/ML services found that organizations adopting these approaches experienced faster innovation, better data utilization, and reduced operational costs (Source: awsstatic.com).

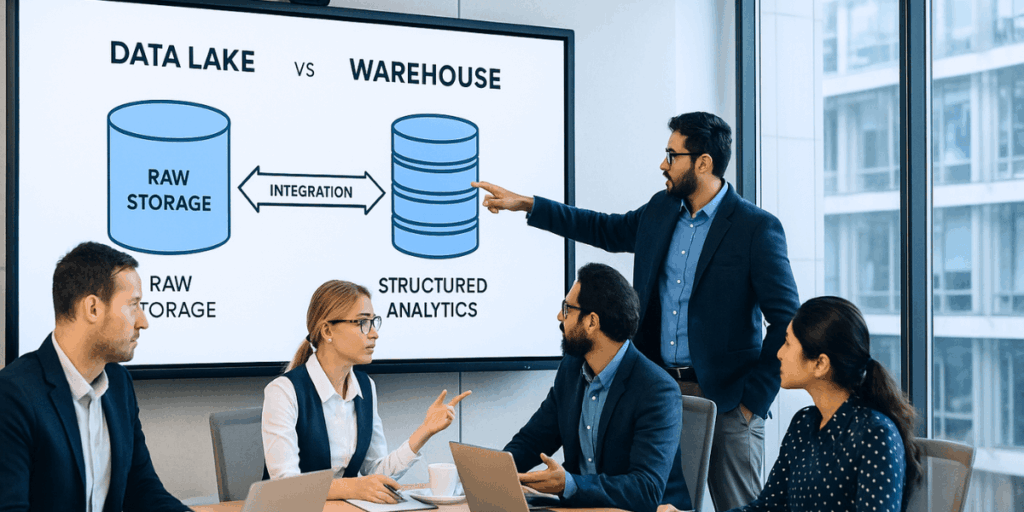

Many organizations wonder: should we choose between a data lake and a data warehouse? The answer is often “no,” because the two are complementary.

| Criterion | Data lake | Data warehouse |
|---|---|---|
| Structure | Raw data (multiple formats) | Transformed and organized data |
| Use cases | Exploration, AI, machine learning | Reporting, dashboards |
| Scalability | Very high (massive storage) | Limited by its predefined, optimized data model |
| Cost | More economical | More expensive (data must be prepared first) |

In practice, organizations often combine the two: the data lake as a raw reservoir, the data warehouse as the analytical layer.

Data governance is the cornerstone of a successful data lake. Without a defined framework, data accumulates in a disorganized way, leading to inconsistencies, duplicates, and risks of regulatory non-compliance.

Effective governance involves:

Benefits: improved trust in data, reduced analytical errors, and optimized business processes.

Metadata are the key to reading the data lake. They describe the origin, format, creation date, and intended uses of the data. Without reliable metadata, a data lake becomes a “dark ocean” where navigation is impossible.

The data catalog centralizes this information. It acts as an internal search engine, allowing analysts and data scientists to quickly find the dataset they need.
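To make the catalog idea concrete, here is a minimal in-memory sketch in Python. The `DatasetEntry` fields mirror the metadata described above (origin, format, creation date, intended uses); the class names, the extra `tags` field, and the simple substring search are illustrative assumptions. Production catalogs are dedicated services with much richer search, lineage, and access features.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class DatasetEntry:
    """Metadata for one dataset: origin, format, creation date, intended uses."""
    name: str
    origin: str
    fmt: str
    created: date
    uses: list[str] = field(default_factory=list)
    tags: list[str] = field(default_factory=list)


class DataCatalog:
    """Hypothetical in-memory catalog acting as a tiny internal search engine."""

    def __init__(self) -> None:
        self._entries: dict[str, DatasetEntry] = {}

    def register(self, entry: DatasetEntry) -> None:
        # Refuse silent overwrites so each dataset keeps a single, trusted description.
        if entry.name in self._entries:
            raise ValueError(f"dataset already registered: {entry.name}")
        self._entries[entry.name] = entry

    def search(self, keyword: str) -> list[str]:
        """Return the names of datasets whose name, origin, uses or tags contain the keyword."""
        kw = keyword.lower()
        return sorted(
            e.name
            for e in self._entries.values()
            if any(kw in text.lower() for text in (e.name, e.origin, *e.uses, *e.tags))
        )


catalog = DataCatalog()
catalog.register(DatasetEntry("crm_customers", "CRM export", "parquet", date(2024, 3, 1),
                              uses=["churn model"], tags=["customer", "pii"]))
catalog.register(DatasetEntry("web_logs", "web servers", "json", date(2024, 5, 12),
                              uses=["traffic analysis"], tags=["logs"]))
print(catalog.search("customer"))  # ['crm_customers']
```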

Best practices:

Benefits: time savings in finding information, better data reuse, faster AI and machine learning projects.

Data lake security is not optional but an absolute necessity. According to the Cost of a Data Breach Report 2024 published by IBM Security and the Ponemon Institute, the global average cost of a data breach reached $4.88 million in 2024 (Source: ibm.com).

To protect a data lake, it is recommended to implement:

Benefits: reduced risk of cyberattacks, compliance with regulations and standards (GDPR, HIPAA, ISO 27001), protection of corporate reputation.
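Whatever controls an organization selects, access to a data lake is usually granted on a deny-by-default, least-privilege basis, with every decision logged. The Python sketch below illustrates that principle for zones of a lake. The roles, the `raw/` and `curated/` prefixes, and the `AccessController` class are hypothetical; a real deployment would rely on the identity, policy, and audit features of its storage platform rather than on application code like this.

```python
from dataclasses import dataclass, field

# Hypothetical policy: which actions each role may perform under each zone prefix.
POLICIES: dict[str, dict[str, set[str]]] = {
    "data_engineer": {"raw/": {"read", "write"}, "curated/": {"read", "write"}},
    "analyst": {"curated/": {"read"}},
}


@dataclass
class AccessController:
    policies: dict[str, dict[str, set[str]]]
    audit_log: list[tuple[str, str, str, bool]] = field(default_factory=list)

    def is_allowed(self, role: str, action: str, path: str) -> bool:
        """Deny by default: allow only if a prefix granted to the role covers the path
        and includes the requested action."""
        grants = self.policies.get(role, {})
        allowed = any(
            path.startswith(prefix) and action in actions
            for prefix, actions in grants.items()
        )
        # Record every decision, granted or not, for later audits.
        self.audit_log.append((role, action, path, allowed))
        return allowed


acl = AccessController(POLICIES)
print(acl.is_allowed("analyst", "read", "curated/sales/2024.parquet"))  # True
print(acl.is_allowed("analyst", "read", "raw/crm/batch-0001.jsonl"))    # False
```

Unknown roles receive an empty grant set, so they are refused without needing an explicit deny rule.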

A poorly organized data lake quickly becomes expensive and inefficient. According to the AWS Well-Architected Framework, an optimized architecture can significantly reduce costs by selecting the right storage tiers and applying proper governance practices (Source: aws.amazon.com).

Essential practices:

Recent research also shows that advanced storage optimization can generate substantial savings. The SCOPe study demonstrates that automatically selecting the storage tier and compression level can reduce costs by 50–83% in cloud environments (Source: arxiv.org).
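A much simpler, rule-based form of tier selection can be sketched in Python: each dataset is assigned a storage tier according to how long ago it was last accessed, and sizes are summed per tier as input for a cost estimate. This is not the SCOPe method, which also selects the compression level; the tier names `hot`, `cool`, and `archive` and the 30/180-day thresholds are illustrative assumptions to be replaced with real access patterns and provider pricing.

```python
from datetime import date

# Illustrative rules: (maximum days since last access, tier). Older data goes to "archive".
TIER_RULES = [(30, "hot"), (180, "cool")]


def choose_tier(last_access: date, today: date) -> str:
    age_days = (today - last_access).days
    for max_age, tier in TIER_RULES:
        if age_days <= max_age:
            return tier
    return "archive"


def plan_tiers(datasets: dict[str, tuple[date, float]], today: date) -> dict[str, float]:
    """Sum dataset sizes (in GB) per target tier, as input for a cost estimate."""
    totals: dict[str, float] = {}
    for last_access, size_gb in datasets.values():
        tier = choose_tier(last_access, today)
        totals[tier] = totals.get(tier, 0.0) + size_gb
    return totals


today = date(2024, 6, 30)
print(plan_tiers({
    "sales_2024": (date(2024, 6, 20), 100.0),
    "sales_q1": (date(2024, 3, 1), 50.0),
    "logs_2022": (date(2023, 1, 1), 500.0),
}, today))
# {'hot': 100.0, 'cool': 50.0, 'archive': 500.0}
```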

The greatest risk of a data lake is drifting into a data swamp, a muddy pool where data becomes unusable.

To avoid this, you need to establish a continuous monitoring and maintenance strategy:

Benefits: sustainability of the data lake, efficient data exploitation over the long term, reduced costs linked to poor data quality.
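Part of this monitoring can be automated. The Python sketch below flags typical swamp symptoms: datasets without an owner or description, datasets nobody has accessed for a long time, and datasets whose latest quality scan found many empty values. The `DatasetHealth` fields and both default thresholds (365 days, 20% empty values) are hypothetical choices for illustration, not recommended values.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class DatasetHealth:
    name: str
    owner: Optional[str]
    description: Optional[str]
    last_access: date
    null_ratio: float  # share of empty values found by the latest quality scan


def swamp_warnings(datasets: list[DatasetHealth], today: date,
                   stale_days: int = 365, max_null_ratio: float = 0.2) -> dict[str, list[str]]:
    """Return, for each problematic dataset, the list of swamp symptoms detected."""
    report: dict[str, list[str]] = {}
    for d in datasets:
        issues = []
        if not d.owner:
            issues.append("no owner")
        if not d.description:
            issues.append("no description")
        if (today - d.last_access).days > stale_days:
            issues.append("stale")
        if d.null_ratio > max_null_ratio:
            issues.append("poor quality")
        if issues:
            report[d.name] = issues
    return report


inventory = [
    DatasetHealth("sales", "finance-team", "Daily sales", date(2024, 6, 29), 0.01),
    DatasetHealth("tmp_export_old", None, "", date(2022, 1, 10), 0.45),
]
print(swamp_warnings(inventory, date(2024, 6, 30)))
# {'tmp_export_old': ['no owner', 'no description', 'stale', 'poor quality']}
```

Run on a schedule, a report like this gives data owners a concrete list of datasets to document, archive, or delete.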

For a long time, companies viewed the data lake and the data warehouse as competing solutions. However, the most effective strategy is often to combine them. This integration provides both the flexibility of a data lake and the analytical power of a structured warehouse.

The data lake acts as a raw reservoir. It stores all data, whether structured, semi-structured, or completely unstructured. Application logs, IoT streams, customer data, documents, images… nothing is filtered at entry. This vast space serves as an innovation lab, particularly for machine learning projects or exploratory analysis.

In contrast, the data warehouse functions as an optimized analytical layer. Data entering it is transformed, organized, and indexed to respond quickly to queries. It is the ideal solution for business intelligence, financial reporting, or monitoring performance indicators.
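The flow from raw reservoir to analytical layer can be illustrated with a small extract-transform-load sketch in Python. Here an in-memory SQLite database merely stands in for the data warehouse, and the `sales` table, its columns, and the `load_into_warehouse` helper are hypothetical: raw JSON lines are parsed, typed, and cleaned, then loaded into a structured table that can be queried with SQL.

```python
import json
import sqlite3


def load_into_warehouse(raw_lines: list[str], conn: sqlite3.Connection) -> int:
    """Transform raw lake records into typed rows and load them into a structured table.
    Returns the number of rows loaded."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales ("
        "order_id INTEGER PRIMARY KEY, region TEXT NOT NULL, amount REAL NOT NULL)"
    )
    rows = []
    for line in raw_lines:
        try:
            rec = json.loads(line)
            rows.append((int(rec["order_id"]),
                         str(rec["region"]).strip().upper(),  # normalize region codes
                         float(rec["amount"])))
        except (json.JSONDecodeError, KeyError, TypeError, ValueError):
            continue  # incomplete records stay in the lake for later investigation
    # INSERT OR REPLACE keeps reloads of the same batch idempotent on order_id.
    conn.executemany("INSERT OR REPLACE INTO sales VALUES (?, ?, ?)", rows)
    conn.commit()
    return len(rows)


conn = sqlite3.connect(":memory:")
raw = [
    '{"order_id": 1, "region": " ge ", "amount": "120.5"}',
    '{"order_id": 2, "region": "VD", "amount": 80}',
    '{"order_id": 3, "region": "GE"}',
    "garbage",
]
print(load_into_warehouse(raw, conn))  # 2
print(conn.execute(
    "SELECT region, SUM(amount) FROM sales GROUP BY region ORDER BY region"
).fetchall())  # [('GE', 120.5), ('VD', 80.0)]
```

The raw lines themselves are never modified, so the same lake data can feed other transformations later.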

This combination provides a strategic advantage:

This hybrid approach leverages the best of both worlds: flexibility and performance.

What is a data lake in computing?

A data lake is a centralized storage space that can hold all kinds of data, raw or transformed, for analytical use.

What is the difference between a data lake and a data warehouse?

The data lake stores raw and varied data, while the data warehouse contains structured data ready for analysis.

How can you prevent a data lake from becoming a data swamp?

By applying best practices: strict governance, cataloging, enhanced security, monitoring, and regular cleaning.

What are the advantages of a data lake?

Flexibility, scalability, cost reduction, easy integration of multiple sources, support for machine learning and big data.

ITTA is the leader in IT training and project management solutions and services in French-speaking Switzerland.

